This is a rant full of sarcasm. Reader discretion advised. However, if you believe that “customers won’t pay for quality” and that “testing your Apex code slows you down”, this text is for you. You are part of the problem.

In the last couple of years, I have had the opportunity to work at a broad variety of Salesforce operations. I have built small orgs from scratch and worked as a developer in a large team with a multi-million budget. The projects and companies I worked at could not have been more different in scope and goal. But all teams (mine included) had one thing in common: They absolutely sucked at testing their Apex code.

5 Signs Your Tests Suck, Too

Salesforce implementations are no different from any other software: Most of them are crap. I think this is because the market is flooded with junior developers, the entry barriers are quite low, and the salaries are relatively high. Additionally, the whole ecosystem is pretty immature, considering that most technologies we work with are less than 5 years old.

It deeply offends me when folks with no understanding of software engineering cobble together an app and call themselves Salesforce programmers. Programming is so much more. It’s about writing software that scales, that is maintainable, that is extendable and that is understandable. This is commonly known as Clean Code.

There are plenty of success factors you should watch to understand whether your project is healthy. Some of them relate to the organization, some to your application architecture, and some are purely artisanal. I consider the ability to work professionally the single most important success factor. The second most important success factor is the craftsmanship of the team’s test suite. If you think of the professionalism of your development process as the upper limit your operation can reach, think of the quality of your test suite as your ability to actually reach that limit.

So let’s look at this from the opposite side. What are the signs that your team fails at this important success factor? Here are five that I found useful to look for.

The First Sign: You Do Not Trust Your Tests

Your test suite should be the first thing you check once you change something. It should be the first thing you show your client as proof that the feature they pay you for actually works. If all your tests pass, you feel confident that you can ship the feature. You don’t feel the need to eyeball anything, just in case. On the contrary, if even one test fails, you are immediately on high alert, because you assume that something important is broken.

Let’s be honest: How often have you seen tests fail in a staging environment and deployed anyway? How often have you commented out failing tests for a deployment, so they wouldn’t even execute? How often have you simply skipped a full regression test run and tried to tailor your deployment around “minimal test execution”? I’ve seen it way too often. And yes, I’ve done it myself.

… Because Your Tests Are Redundant And Irrelevant

Why would you write irrelevant tests in the first place? This may seem counter-intuitive, but I learned it the hard way: Most tests I initially wrote were completely irrelevant. Not by their intent, but by their implementation. But how can you tell? Turns out, this is pretty easy. If you see a test fail and think to yourself “This is not related to the tested functionality” or “The assert fails, but I know the feature still works”: That’s it. Anytime a test fails, you should assume the tested functionality is broken. Nothing else. If this is not the case 95% of the time, your tests are crap.

If a test fails and the failure is not directly caused by the functionality under test being broken, it typically means the test is too tightly coupled. Usually, such tests execute redundant or irrelevant actions. I will dedicate a future post to using Mocks and Stubs to build loosely coupled tests and to designing your Apex classes for testability. You also want your tests DRY. Make sure that every test verifies exactly one “thing” you care about. If this thing breaks, exactly one test should fail with it. Never put redundant asserts in your tests that are not directly related to the “thing” the test verifies.

… Because Your Architecture Sucks

Salesforce heavily markets its low-code features such as the Flow Designer or the Process Builder. Yes, a flow helps when you only have to sync the address of related contacts with the address of their account. But these sorts of requirements quickly get out of hand. Think about an additional requirement to automatically sync the shipping address with the billing address. Or a validation rule that requires accounts to have both addresses filled once they become customers. How about enabling duplicate rules that use the address? You will quickly end up with a behemoth of a flow that is unmaintainable and completely untested.

These low-code automation tools are pretty powerful and one of the reasons the platform is so successful. And don’t get me wrong: They are not bad per se. But they are typically developed and deployed in an unprofessional manner (meaning no automated test suite and no deployment process). This causes side effects like tests that suddenly break in production. It does not matter which tools you used to implement a certain functionality. Instead, it is imperative to develop, test and deploy all functionality with the same level of professionalism.

The Second Sign: You Struggle With Code Coverage

Code coverage should never be a metric you pursue. Instead, you achieve code coverage as a side effect of other endeavors. Well-written software easily achieves 100% line coverage. If yours doesn’t, it’s not well written. Does every component of your system need to be well written? I guess not. But you should aim for nothing less. If every single line of code is covered by at least one test, you can at least tell that all your code can be executed. Does this mean it is bug-free? Hell no. But as long as your tests actually assert something, it does mean that every single line of code does what it is intended to do. Even though the intention may be wrong or incomplete, at least nobody can accidentally break it.

So why do so many people struggle to achieve code coverage? There are countless posts on the Salesforce StackExchange and Success Forum asking “how to get code coverage”. I think it’s essentially because most people do not even understand why we test.

… Because You Write Tests For Your Code, Not Your Functionality

If I skim the top questions, they all read like this: “I have this snippet, and I need to write a test case that covers it, but I cannot get coverage for these 10 lines. Can you please provide test code for me that covers these lines?”

Let me be absolutely clear: If code coverage is a problem for you, you apparently lack the most basic understanding of software engineering. You should never strive for coverage for coverage’s sake. The only acceptable answer to a question like this should be: “Throw it away and start over.” Some of the most useful reads I recommend to anybody getting into programming are Refactoring by Martin Fowler and Clean Code by Robert C. Martin. After understanding and applying the techniques they teach, 95%+ code coverage comes naturally.

… Because Your Coding Style Is Bad

If your 150-line static method has if-statements nested five levels deep inside loops and a try-catch block wrapped around it, you are going to have a hard time testing it. A method should be understandable (yours probably is not) and do “only one thing” (yours probably does not).
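To make “do only one thing” a bit more concrete, here is a minimal sketch of the address rules from the flow example above, split into small single-purpose methods. It is written in Java rather than Apex, purely so it can run outside an org; the class names `AccountSketch` and `AddressRules` are hypothetical, and a real implementation would operate on `Account` records inside a trigger handler.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified stand-in for an Account record (an SObject in Apex).
final class AccountSketch {
    String billingStreet;
    String shippingStreet;
    boolean isCustomer;

    AccountSketch(String billingStreet, String shippingStreet, boolean isCustomer) {
        this.billingStreet = billingStreet;
        this.shippingStreet = shippingStreet;
        this.isCustomer = isCustomer;
    }
}

// Each rule lives in its own small method, so each one can be tested in isolation.
final class AddressRules {
    private AddressRules() {}

    // One thing: should the shipping street be filled from the billing street?
    static boolean needsShippingCopy(AccountSketch acc) {
        return isBlank(acc.shippingStreet) && !isBlank(acc.billingStreet);
    }

    // One thing: copy the billing street to the shipping street.
    static void copyBillingToShipping(AccountSketch acc) {
        acc.shippingStreet = acc.billingStreet;
    }

    // One thing: customers must have both addresses filled.
    static boolean hasRequiredAddresses(AccountSketch acc) {
        return !acc.isCustomer || (!isBlank(acc.billingStreet) && !isBlank(acc.shippingStreet));
    }

    // Orchestration only: a flat loop that delegates every decision to the methods above.
    static List<AccountSketch> syncAndCollectInvalid(List<AccountSketch> accounts) {
        List<AccountSketch> invalid = new ArrayList<>();
        for (AccountSketch acc : accounts) {
            if (needsShippingCopy(acc)) {
                copyBillingToShipping(acc);
            }
            if (!hasRequiredAddresses(acc)) {
                invalid.add(acc);
            }
        }
        return invalid;
    }

    private static boolean isBlank(String s) {
        return s == null || s.trim().isEmpty();
    }
}
```

Each of the three rules can now be verified with two or three tiny tests, and `syncAndCollectInvalid` only needs a test for its wiring instead of one test per path through a deeply nested block.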

There are plenty of design patterns and best practices out there that improve the testability of your code in one way or another. But I have never experienced a single technique that was more powerful than Test-Driven Development. TDD is a topic of its own, so I will not go into the pros, cons, and limitations of TDD. But there is one thing that will blow your mind once you try it: If you force yourself to write tests while you write your code, you subconsciously design your classes and methods to be easy to test, easy to read, and easy to re-use. So we can skip the discussion of whether TDD helps you write bug-free code, whether 100% line coverage is useful, or whether it is really necessary to always write tests first. TDD helps you write better code, quicker.

… Because Your Architecture Sucks

Yes, this is going to be a recurring theme: Most of the time, bad architecture is responsible for failing deployments, delayed delivery, and unstable features. “Use a flow to implement this, you don’t need to write tests for it” is probably the single most stupid statement one can make. Spoiler: You don’t write tests because Salesforce requires you to achieve 75% coverage of Apex code.

A professional developer writes tests to ensure that a feature works as specified. Tests prove to others that the functionality works. Additionally, they act as a fail-safe if someone or something accidentally breaks said functionality. Tests are supposed to cover your functionality, not your code. And zero- or low-code automations are functionality as well. If you don’t test your functionality just because you used a zero-code tool to implement it, you are not being professional.

The Third Sign: Your Tests Don’t Tell You Anything

You want your tests to immediately tell you about the complete state of your system: What is working? And, most importantly: What is broken? You don’t want to spend 15 minutes investigating a failing test to understand what exactly is broken. You want to derive this information directly from the test results as displayed, so you can spend your precious time investigating why it is broken and how to fix it.

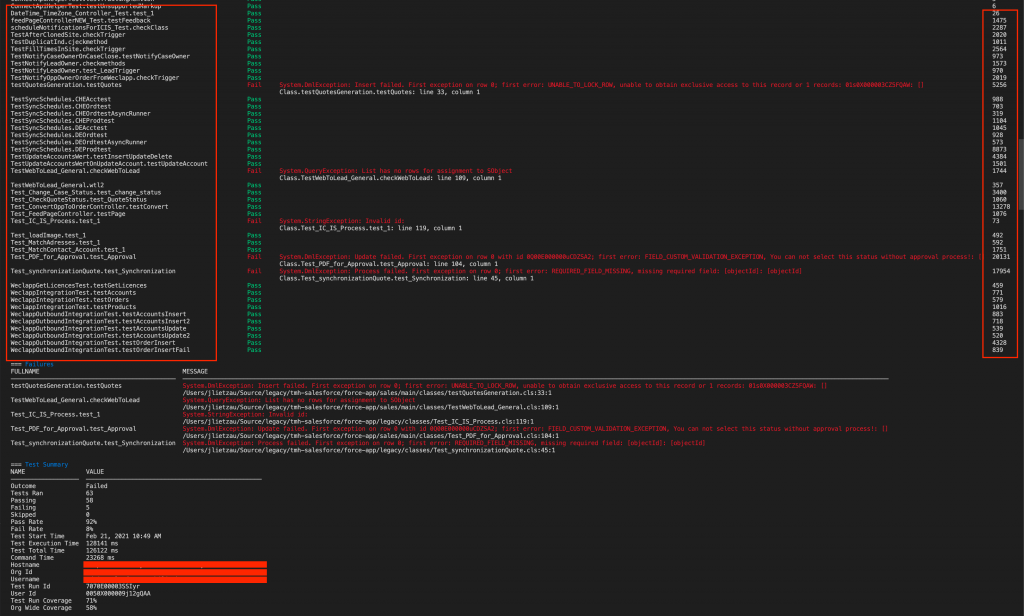

Have you ever worked in an org with absolutely zero documentation? And when you hustled your way through the implementation to pin down a bug, the full test run came back like this?

Thanks for nothing. Really gave me some confidence in what was going on in the org and especially which functionality was working and which was broken. Test_MatchAddresses.test_1, TestWebToLead_General.wtl2 or TestDuplicatInd.cjeckmethod and all the test cases in TestSyncSchedules really were a great help in understanding what was going on.

… Because You Suck At Naming Your Tests

The irony here is that Salesforce actually promotes such unprofessional and amateurish practices. If you take a look at their official resources, they’re full of examples with test method names like runPositiveTests and test1.

The point being: Giving your tests such cryptic names is just rude. How is anyone expected to understand the original intention? How am I supposed to tell from a test summary which functionality broke due to my refactoring? How should anyone derive an understanding of how your code works from these tests? How are you expected to prove that a certain specification/feature is implemented? The answer is simple: You can’t. And that’s why every good test suite has self-explanatory names for both its classes and its methods. I prefer the following notation:

MethodUnderTest - RelevantInputOrState - ExpectedOutcome

It works extremely well for unit tests on object-oriented code. Your test suite not only describes what functionality an object is expected to have (the first part), but also how to invoke that functionality (the second part) and how it is expected to behave (the third part). If a test fails, you can tell from the name of the test case which object or method in which state did not produce the desired outcome. Pretty helpful.
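Here is a minimal sketch of what such names could look like. Hyphens are not legal in Java or Apex identifiers, so the three parts are joined with underscores. The unit under test, `CustomerAddressRule`, is hypothetical, and the hand-rolled `runAll` method only stands in for a real test runner; in Apex, these would be `@IsTest` methods, and the platform’s test results would list them by name.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical unit under test: customers need both a billing and a shipping street.
final class CustomerAddressRule {
    private CustomerAddressRule() {}

    static boolean hasRequiredAddresses(boolean isCustomer, String billing, String shipping) {
        return !isCustomer || (notBlank(billing) && notBlank(shipping));
    }

    private static boolean notBlank(String s) {
        return s != null && !s.trim().isEmpty();
    }
}

// Test names follow MethodUnderTest_RelevantInputOrState_ExpectedOutcome.
final class CustomerAddressRuleTest {
    static void hasRequiredAddresses_customerWithBothAddresses_returnsTrue() {
        check(CustomerAddressRule.hasRequiredAddresses(true, "Main St 1", "Dock 7"));
    }

    static void hasRequiredAddresses_customerWithoutShipping_returnsFalse() {
        check(!CustomerAddressRule.hasRequiredAddresses(true, "Main St 1", null));
    }

    static void hasRequiredAddresses_prospectWithoutAddresses_returnsTrue() {
        check(CustomerAddressRule.hasRequiredAddresses(false, null, null));
    }

    private static void check(boolean condition) {
        if (!condition) {
            throw new AssertionError("expectation not met");
        }
    }

    // Stand-in for a real test runner: returns the names of the failing tests.
    static List<String> runAll() {
        Map<String, Runnable> tests = new LinkedHashMap<>();
        tests.put("hasRequiredAddresses_customerWithBothAddresses_returnsTrue",
                CustomerAddressRuleTest::hasRequiredAddresses_customerWithBothAddresses_returnsTrue);
        tests.put("hasRequiredAddresses_customerWithoutShipping_returnsFalse",
                CustomerAddressRuleTest::hasRequiredAddresses_customerWithoutShipping_returnsFalse);
        tests.put("hasRequiredAddresses_prospectWithoutAddresses_returnsTrue",
                CustomerAddressRuleTest::hasRequiredAddresses_prospectWithoutAddresses_returnsTrue);

        List<String> failures = new ArrayList<>();
        for (Map.Entry<String, Runnable> test : tests.entrySet()) {
            try {
                test.getValue().run();
            } catch (AssertionError e) {
                failures.add(test.getKey());
            }
        }
        return failures;
    }
}
```

If `hasRequiredAddresses_customerWithoutShipping_returnsFalse` shows up red, you know which method, which state and which expectation are involved without opening the test class.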

… Because You Don’t Differentiate Between Unit Tests And E2E Tests

I know there is some discussion about what counts as a “Unit Test” and what counts as an “E2E Test”. I use the term “Unit Tests” for tests that work completely without database calls. They typically only test one state of one single method of an object. E2E Tests, on the other hand, are way more complex. They rely on test data being set up in the database and perform updates on those records. Then, they query the database to check whether something happened. Because they involve SOQL and DML, they also execute all validation rules, flows, and triggers on all objects (the domain layer). It is in their nature that E2E Tests are much more fragile and fail much more often. And if they fail, you typically cannot tell what is broken without further investigating the underlying source code.

Practically all the tests I wrote when I first started were E2E tests. Some of these tests indirectly relied on business logic from other modules in their test setup, others even explicitly invoked business logic in their @TestSetup code. This introduces too many dependencies. Not only does it make the tests extremely fragile (if unrelated business logic breaks, the tests break, too), it also makes them completely untrustworthy. And if they fail, you have no clue what’s broken. Just that something’s broken. As a rule of thumb, a robust test suite should consist of 90% unit tests that use advanced mocking and stubbing techniques for isolation and 10% E2E tests that verify that these individually tested components work together. The less “stuff” goes on in a single test, the easier it is to tell what is broken when that test fails.

… Because You Don’t Use Asserts

This may sound completely absurd to some, but out of the 60 tests you see above, only 10 or so actually had assert statements. The rest of them only invoked the business logic to get code coverage and wrote some variables to the debug log. So the tests always passed, as long as the code did not throw exceptions. That is by far the most embarrassing thing I have ever seen.

Instead, use asserts. Structure each test according to the ARRANGE – ACT – ASSERT pattern. As a rule of thumb, add at least one and up to ten assert statements to each test case. As a side note: If you feel the need to put more asserts in a single test, you are probably testing too much functionality and should split it into multiple test cases instead. If you feel that it is hard to write unit tests for your code, your code sucks. So go back to your code and refactor it until you find it easy to verify its behavior. It really is that simple.
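The difference is easiest to see side by side. In the minimal sketch below (Java again; `ContactRecord` and `ContactAddressFiller` are hypothetical), both tests execute every line of the unit under test, so both produce full line coverage. Only the second one, structured as ARRANGE – ACT – ASSERT, fails when the behavior is wrong. In Apex, the small helper would be replaced by `System.assertEquals(expected, actual, message)`.

```java
import java.util.List;
import java.util.Objects;

// Hypothetical, simplified stand-in for a Contact record.
final class ContactRecord {
    String mailingStreet;

    ContactRecord(String mailingStreet) {
        this.mailingStreet = mailingStreet;
    }
}

// Hypothetical unit under test.
final class ContactAddressFiller {
    private ContactAddressFiller() {}

    // Fills blank mailing streets with the account street; returns how many contacts changed.
    static int fillBlankStreets(String accountStreet, List<ContactRecord> contacts) {
        int changed = 0;
        for (ContactRecord contact : contacts) {
            if (contact.mailingStreet == null || contact.mailingStreet.isEmpty()) {
                contact.mailingStreet = accountStreet;
                changed++;
            }
        }
        return changed;
    }
}

final class ContactAddressFillerTest {
    // Anti-pattern: runs every line (full line coverage) but asserts nothing.
    // It would keep passing even if fillBlankStreets overwrote every street.
    static void coverageOnly() {
        List<ContactRecord> contacts = List.of(new ContactRecord(null), new ContactRecord("Dock 7"));
        ContactAddressFiller.fillBlankStreets("Main St 1", contacts);
        System.out.println(contacts.get(0).mailingStreet);
    }

    static void fillBlankStreets_oneBlankOneSet_fillsOnlyTheBlankOne() {
        // ARRANGE: one contact without a street, one with its own street
        ContactRecord blank = new ContactRecord(null);
        ContactRecord alreadySet = new ContactRecord("Dock 7");

        // ACT
        int changed = ContactAddressFiller.fillBlankStreets("Main St 1", List.of(blank, alreadySet));

        // ASSERT: each assert checks one aspect of the same behavior
        assertEquals(1, changed, "number of changed contacts");
        assertEquals("Main St 1", blank.mailingStreet, "blank street is filled");
        assertEquals("Dock 7", alreadySet.mailingStreet, "existing street is kept");
    }

    private static void assertEquals(Object expected, Object actual, String message) {
        if (!Objects.equals(expected, actual)) {
            throw new AssertionError(message + ": expected <" + expected + "> but was <" + actual + ">");
        }
    }
}
```

The assert messages matter, too: when the second test fails, the message says which expectation broke, which is exactly the information a bare log statement never gives you.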

The Fourth Sign: Your Tests Are Slow

You want your tests to be fast. You want to execute them often. And you want your tests to be easy to write and maintain, so you can quickly add new tests or modify existing ones. This is the only way to ensure they do not hinder your development process. To achieve this, your tests must be lean and well isolated from their dependencies.

Take a look at the example from above: Some of these test cases took more than 20 seconds to execute. Now imagine a test suite that actually tested something and wasn’t only there to generate code coverage. Such a test suite easily runs for 10 to 15 minutes straight, even with parallel execution turned on. Why is this a bad thing? Because the longer your test suite runs, the less it will be executed. Additionally, if a full test run is currently running on an org, another one cannot be queued. Therefore, your CI pipeline is blocked and you need some very advanced techniques to parallelise Sandboxes.

… Because You Do Not Use The Apex Testing Framework

The worst test setup code I’ve ever seen was a bunch of static variables in the static initializer of the test class. Apex comes with a more or less useful testing framework that rolls back database changes after each test and isolates test records from production data. Use it. There is simply no excuse for not knowing that it exists and not using it. I will not elaborate on that any further.

… Because You Do Not Use Mocks And Stubs

Another major problem with most tests I see is the complete absence of Mocks and Stubs. I don’t know why, but most developers seem to be afraid of them. Salesforce code typically works with SObject records, and DML is incredibly slow. Especially if complex SObject relationships are involved, the setup code is quite tedious to write and very fragile (creating products, setting prices, creating line items, etc.). This is not only slow, but also introduces way too many dependencies on business logic.

Instead of setting up complex trees of records, use mocks. I will explain advanced techniques for mocking complex SObject relationships (e.g. Opportunities with Line Items and Products) without a single SOQL query or DML statement in a future post. Together with a good class design (SOLID), you will be able to write 95% of your unit tests completely decoupled from your business logic. This will make them extremely robust and fast.
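The advanced techniques are for that future post, but the basic idea can be sketched in a simpler form: let the logic depend on an interface instead of querying records itself, and hand it an in-memory implementation in the test. All names below (`LineItem`, `LineItemSource`, `OpportunityTotals`, `InMemoryLineItemSource`) are hypothetical, and the sketch is in Java. Apex also ships a built-in stub API (`System.StubProvider` together with `Test.createStub()`), which this hand-written variant does not use.

```java
import java.util.List;
import java.util.Map;

// Hypothetical, simplified line item (an OpportunityLineItem in Salesforce).
final class LineItem {
    final String productCode;
    final int quantity;
    final double unitPrice;

    LineItem(String productCode, int quantity, double unitPrice) {
        this.productCode = productCode;
        this.quantity = quantity;
        this.unitPrice = unitPrice;
    }
}

// Hypothetical data-access seam; a production implementation would run SOQL.
interface LineItemSource {
    List<LineItem> lineItemsFor(String opportunityId);
}

// Unit under test: depends on the interface, never on the database directly.
final class OpportunityTotals {
    private final LineItemSource source;

    OpportunityTotals(LineItemSource source) {
        this.source = source;
    }

    double totalFor(String opportunityId) {
        double total = 0;
        for (LineItem item : source.lineItemsFor(opportunityId)) {
            total += item.quantity * item.unitPrice;
        }
        return total;
    }
}

// Test stub: serves canned records from memory, so no SOQL, no DML and no triggers run.
final class InMemoryLineItemSource implements LineItemSource {
    private final Map<String, List<LineItem>> itemsByOpportunity;

    InMemoryLineItemSource(Map<String, List<LineItem>> itemsByOpportunity) {
        this.itemsByOpportunity = itemsByOpportunity;
    }

    @Override
    public List<LineItem> lineItemsFor(String opportunityId) {
        return itemsByOpportunity.getOrDefault(opportunityId, List.of());
    }
}
```

A test then constructs `OpportunityTotals` with the in-memory source and a few canned line items; no products, price book entries or inserts are needed, and none of the unrelated automation on those objects gets a chance to interfere.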

… Because You Execute Irrelevant Setup Code

Ever introduced new functionality and just added some test cases to an existing, unrelated test class so you don’t have to bother writing your own fixtures? Yeah … don’t do this: A test class should cover one “thing”, just as a class should do one “thing”. I’ve seen way too much re-use of test classes out of pure laziness. This typically leads to bloated test setup code that couples tests together that shouldn’t be coupled. As a side effect, it also hurts performance.

As a rule of thumb, always introduce new test classes for new functional classes (this rule applies to unit tests only). Test setup code is not just a necessary evil; it also explicitly documents the dependencies of the functionality it tests. The tests themselves also document how the code works. This helps new developers understand what the class does, how it can be invoked, and what dependencies it requires. Design your test classes with the Principle of Least Surprise in mind.

The Fifth Sign: Your Tests Fail Unreliably

Your tests have to be reliable. If the functionality works, your tests are green. If your tests are red, only the related functionality under test is broken. You should strive for nothing less. This is absolutely mandatory in order for your team to trust your test suite.

Nothing better than deploying code that worked in your dev environment to your integration environment and suddenly seeing 20 tests from a completely unrelated package fail. Further inspection reveals that the test setup code suddenly threw an UNABLE_TO_LOCK_ROW exception (that’s going to be tough to fix) or REQUIRED_FIELD_MISSING (that’s going to be easy to fix). Why does this happen?

… Because You Have Too Many Dependencies

A typical rookie mistake I always see (and made way too often myself) is relying on business logic in your test setup that is not related to the functionality under test. Imagine you have a trigger that always updates the contact address with the address of the account. Now you implement additional logic on contacts with addresses that you are about to test. You write your own test class (sweet) and insert a new account with contacts. But you only specify the address on the account, because you expect the other implementation to sync it to the contacts. Now you query the contacts with their addresses to do some asserts in your tests. And that’s stupid. If the sync logic changes, all your tests break.

It is important to insert contacts with addresses directly. Your test setup should cover all of the dependencies that your tests require to pass. If you feel that this does not work because other logic continuously interferes with yours, this is a good indicator that this functionality might need some refactoring for testability (use mocked contacts to test the business logic without DML). It is better to have an independent, isolated and robust test class that duplicates some of the setup steps than to re-use overly complex fixtures that insert records your particular tests do not need. Independence beats avoiding redundancy.
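A minimal sketch of this rule, in Java and with hypothetical names (`ContactData`, `RegionAssigner`, `RegionAssignerFixtures`): the fixture sets every field the logic under test reads, so the test no longer depends on some other trigger copying the account address. In an Apex E2E test, the same idea means inserting the contact with its address already set, e.g. `insert new Contact(LastName = 'Test', MailingCountry = 'AT');`, instead of inserting an account and querying the contact back.

```java
// Hypothetical, simplified Contact record.
final class ContactData {
    String lastName;
    String mailingCountry;
}

// Hypothetical logic under test: reads the contact's own address and nothing else.
final class RegionAssigner {
    private RegionAssigner() {}

    static String regionFor(ContactData contact) {
        String country = contact.mailingCountry;
        if (country == null || country.isEmpty()) {
            return "UNASSIGNED";
        }
        switch (country) {
            case "DE": case "AT": case "CH": return "DACH";
            case "US": case "CA": return "NA";
            default: return "OTHER";
        }
    }
}

// Fixture owned by this test class only: every field the tests depend on is set
// explicitly, instead of relying on a separate account-to-contact sync to fill it.
final class RegionAssignerFixtures {
    private RegionAssignerFixtures() {}

    static ContactData contactIn(String mailingCountry) {
        ContactData contact = new ContactData();
        contact.lastName = "Test";
        contact.mailingCountry = mailingCountry;
        return contact;
    }
}
```

If the account sync changes or disappears, these tests do not notice, which is exactly the point: they only fail when the region logic itself breaks.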

… Because You Have Too Many Integration Tests

In my experience, most people cannot even tell the difference between unit tests and integration tests. So naturally, they write way too many integration tests. By their nature, these tests are fragile. They typically are the first to break. So the more integration tests you have, the more test failures you will see. The better your architects, the less this will happen.

There’s no easy way to solve this: You have to start with a solid architecture that makes good decisions as to when to use Salesforce’s low-code tools and when to use Apex. Your low-code implementations must be covered by your automated test suite, too. Your Apex code must be clean and designed for testability. Only then will you achieve real mastery in testing Apex.

So… How To Not Suck At Testing?

Code quality and testing may be the most controversial issues discussed among developers. I think this might be related to the ignorance and hubris of the average software developer, who cannot acknowledge their own fallibility. The problem is: We all make mistakes. We get sloppy. And our systems almost always become more and more complex over time. But the human mind can only deal with a limited amount of complexity. As a result, we need to employ mechanisms that keep the complexity in check. If we don’t, our systems break in our hands. Nobody can maintain them. New developers take ages until they are up to speed.

Even though the conclusion should already be obvious, there are still way too many senior devs out there who audaciously refuse to properly test their software. In my experience, it’s almost always a matter of will, not skill. So here’s the sixth sign that you suck at testing: You don’t even want to test, because you honestly believe that it is unnecessary, slows you down and is overly complicated. Congratulations, you are actively sabotaging your project, and if you are a consultant, you are actively damaging your client.

I would conclude that it is not very hard to become good at testing. The first and most important step is the pure will to future-proof your work by ensuring its functionality with an automated test suite. Once you have started there, everything else comes naturally.

Closing Thoughts

By no means do I intend to discourage junior developers from starting a career with Salesforce with this rant. And I want to emphasise that even though I referenced examples involving the work of real people, I would never blame the programmers who originally made the mistakes I pointed out. When I look at earlier tests I wrote, they all show some, if not all, of the same problems.

But there are two types of people I do blame: First, managers who deliberately decide to heavily oversell inexperienced junior programmers and put them in situations where they can do nothing but fail and where their work actually jeopardises the project they work on. Second, seniors who missed the last 15 years of development around professionalism in software engineering and simply refuse to improve their craftsmanship. There are still people out there who flat-out deny the usefulness of testing and clean coding practices. These people seriously damage the reputation of the whole industry.