If you work on a larger Salesforce Org, you probably need a trigger framework to implement the single trigger per SObject best practice. This is especially challenging when you work on multiple, well-separated second-generation packages (2GP).

Requirements for a Trigger Framework

I wrote about the "single trigger per object" topic a couple of months ago, and for smaller Orgs, I still advise against introducing a framework too early. Smaller Orgs don’t need it: the overhead is usually not worth it. However, as your Org grows, you will eventually need to introduce such a framework. To avoid getting in your way, it should meet the following requirements.

- The framework must be progressive. It must co-exist with existing triggers that are not aware of the framework and we must be able to migrate existing logic one by one.

- The framework should encourage good coding practices. The code that is written for the framework should be DRY and SOLID.

- It must be designed for Second-Generation Packaging (2GP). Downstream dependencies must be able to register their logic to existing objects without the need to modify the framework. They also must be able to register new SObjects with the framework.

- Using and understanding the framework should be easy. The interface is expected to be as simple and straightforward to implement as a Queueable. A trigger handler should have no boilerplate and existing code should be easy to migrate.

- We want useful functionality to make our lives easier. A framework is not an end in itself. We want to enable and disable functionality in production (as we know it from flows), we want to control the order of execution, and the framework should support our E2E testing strategy.

Can’t be too hard, can it?

Existing Trigger Frameworks And What They Are Lacking

Extensive research of existing frameworks and concepts showed that even though there is a plethora of blog posts written about the topic, none of the proposed frameworks satisfies all the requirements.

One can broadly group them into three and a half categories.

Custom Metadata-based Frameworks

This is the only concept that is truly designed with 2GP in mind. In general, these frameworks are acceptable. However, they all share one common flaw: the interface is way too complex. There is simply no excuse for methods with 5 or more input parameters, period.

Dan Appleman’s proposal in the 5th edition of "Advanced Apex Programming" is a good start, but it lacks both a concise, lean interface and a simple configuration.

Both Nebula Core and Google’s Trigger Actions implementation have slightly leaner interfaces, but they suffer from the same overly complex configuration.

None of them offer utilities for developers to write better E2E tests.

Overly-complex Interfaces

These implementations meet none of our requirements. The interface is overly complex and forces a developer to implement 7 or more methods (depending on the variation). The framework itself encapsulates no complexity and offers no quality-of-life features for developers.

I have seen different variations of the same concept on many Salesforce blogs (Salesforce Ben, Apex Hours). All of them share the same fundamental design flaws and are not worth further investigation. I am pretty sure 90% of them are blunt copy-paste.

Non 2GP-compatible Frameworks

There are multiple variations of the same old concept out there. They all lack the functionality to register handlers with the framework in downstream dependencies. As a result, the framework cannot load handlers dynamically at runtime but has to be called explicitly by the downstream dependency.

This makes them incompatible with 2GP.

Domain Implementations from DDD

Even though it is not an official trigger framework, the FinancialForce Apex Library supports similar use cases in its SObjectDomain implementation. It does not support the dynamic registration of handlers, so it is not suitable for 2GP.

Introducing the JSC Trigger Framework

Unfortunately, all concepts I found had one or more flaws and did not satisfy all my requirements. Some were not compatible with package-based development, others were simply too complex to be useful. To address these limitations, I built on the good concepts of the Custom Metadata-based frameworks and tried to improve them.

TriggerExecutable Interface and TriggerContext

We wanted the framework interface to be as straightforward and simple as possible. A good trigger framework for Apex exposes all standard functionality of the platform. At the same time, it is expected to reduce complexity by doing the heavy lifting for us.

```apex
public interface TriggerExecutable {
    /**
     * The framework automatically populates the `TriggerContext` with all
     * variables from `System.Trigger`.
     *
     * @param context
     */
    void execute(TriggerContext context);
}
```

The TriggerContext encapsulates all variables from the static Trigger. This is much cleaner than having methods with 5 to 10 parameters while still giving full access to all standard functionality. The similarity to the Queueable or Schedulable interface is not a coincidence.

When the framework executes a handler, the context is automatically populated from the Trigger variables. The handler, therefore, has full access to all variables.
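The following is a minimal sketch of what "automatically populated" could mean in practice. The class name `TriggerContextFactory` and its `fromTrigger` method are hypothetical and not taken from the published framework. The sketch copies each `System.Trigger` context variable into the matching field of the TriggerContext class that follows. The trigger passes in its own SObjectType, just as the trigger example later in this article does with `Triggers.run`.

```apex
// Hypothetical helper (not the framework's real code): copies every
// System.Trigger context variable into a TriggerContext instance.
public class TriggerContextFactory {
    public static TriggerContext fromTrigger(Schema.SObjectType sObjectType) {
        TriggerContext context = new TriggerContext();
        context.operation = Trigger.operationType;
        context.isInsert = Trigger.isInsert;
        context.isUpdate = Trigger.isUpdate;
        context.isDelete = Trigger.isDelete;
        context.isUndelete = Trigger.isUndelete;
        context.isBefore = Trigger.isBefore;
        context.isAfter = Trigger.isAfter;
        context.size = Trigger.size;
        // The calling trigger passes its own type, e.g. Schema.Opportunity.SObjectType
        context.sObjectType = sObjectType;
        // Trigger.newMap is null in before insert and delete contexts;
        // Trigger.old and Trigger.oldMap are null in insert and undelete contexts
        context.newMap = Trigger.newMap;
        context.oldMap = Trigger.oldMap;
        context.newList = Trigger.new;
        context.oldList = Trigger.old;
        return context;
    }
}
```

Outside of a trigger, the `Trigger` variables are simply empty. This is one reason why handlers that only read from the context are easy to test.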

```apex
public class TriggerContext {
    public System.TriggerOperation operation;
    public Boolean isInsert;
    public Boolean isUpdate;
    public Boolean isDelete;
    public Boolean isUndelete;
    public Boolean isBefore;
    public Boolean isAfter;
    public Integer size;
    public Schema.SObjectType sObjectType;
    public Map<Id, SObject> newMap;
    public Map<Id, SObject> oldMap;
    public List<SObject> newList;
    public List<SObject> oldList;

    /**
     * Convenience getter for the primary record list. Always returns a non-null list.
     * Returns newList for insert, update and undelete operations, oldList for delete.
     *
     * @return `List<SObject>`
     */
    public List<SObject> getPrimaryList() {
        if (this.isInsert || this.isUpdate || this.isUndelete) {
            return this.newList;
        } else {
            return this.oldList;
        }
    }

    /**
     * Convenience getter for record ids in this trigger context. Returns empty Set
     * in before insert context.
     *
     * @return `Set<Id>`
     */
    public Set<Id> getRecordIds() {
        if (this.isInsert || this.isUpdate || this.isUndelete) {
            // newMap is null in before insert, because the records have no Ids yet
            return this.newMap != null ? this.newMap.keySet() : new Set<Id>();
        } else {
            return this.oldMap.keySet();
        }
    }
}
```

Custom Metadata to Register Trigger Handlers

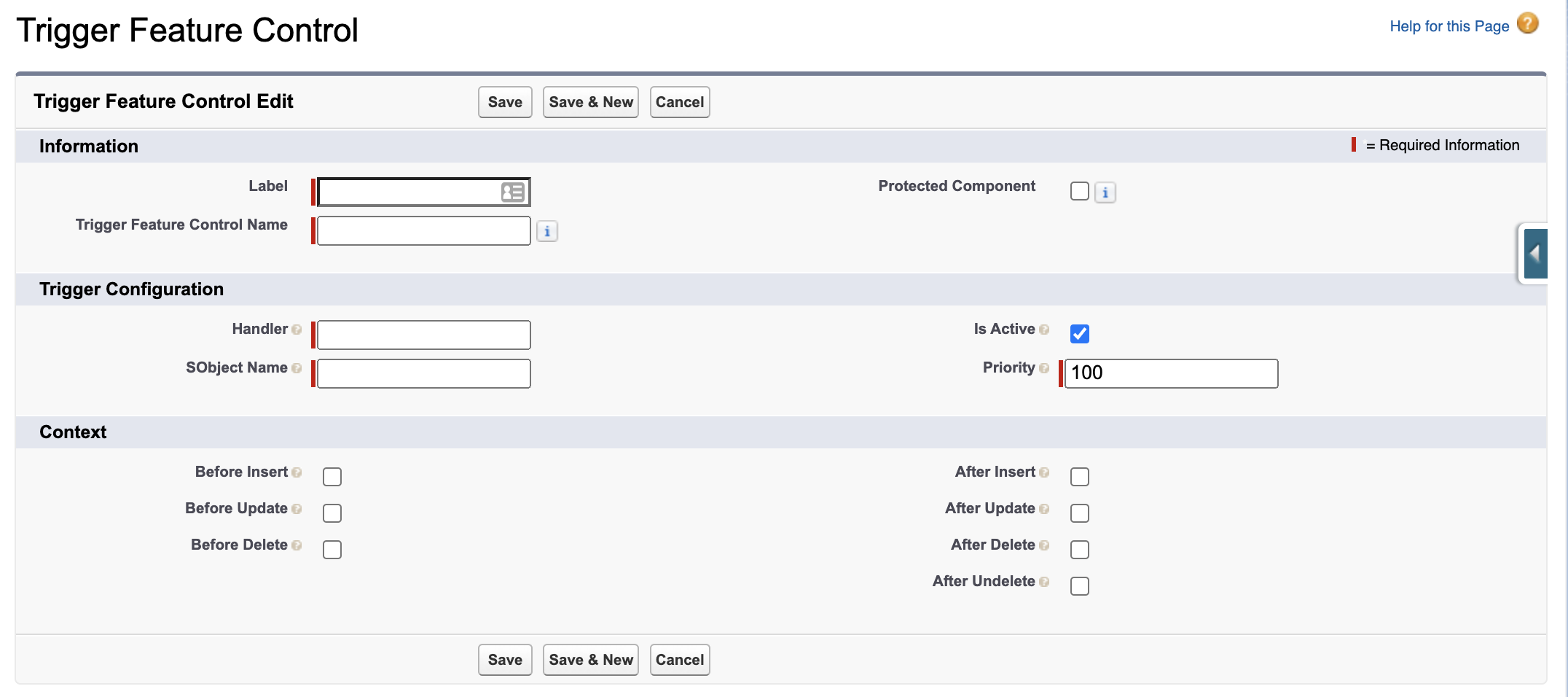

To control the order of execution and to register new handlers, we use custom metadata. The custom metadata lets you configure when and how the framework executes a handler.

Why did I go with checkboxes to enable contexts, instead of a more normalized TRIGGER_OPERATION picklist with one record per trigger operation?

- First of all, I expect the trigger operations (insert, update, delete and undelete) to never change. So there is no real benefit from a more normalized data model.

- Second, I value understandability, manageability, and cohesion higher than database normalization. The benefits of quickly seeing where and how a specific functionality is executed by far outweigh the downsides of slightly more complex records.

- Third, custom metadata records are not deleted when packages are installed with default settings. Instead, they remain active on the target org and are only marked as deprecated. This can cause nasty bugs on the target org. Having a single file to control the functionality has much more cohesion and is preferable.
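The following sketch shows how a framework could turn such records into handler instances at runtime. It is not the published implementation, and every name in it is an assumption for illustration. That includes the custom metadata type `TriggerHandlerControl__mdt` and its fields `SObjectType__c`, `HandlerClassName__c`, `Priority__c`, `IsActive__c` and the `RunOn...__c` checkboxes, one per trigger operation. The key ideas are the priority-ordered query and `Type.forName`. Together, they let a downstream package register a handler just by shipping a record.

```apex
// Hypothetical loader sketch. TriggerHandlerControl__mdt and all of its fields
// are assumed names for illustration, not the framework's real schema.
public class HypotheticalHandlerLoader {
    public class HandlerLoaderException extends Exception {}

    // Returns handler instances for one SObject and operation, ordered by priority.
    public static List<TriggerExecutable> loadHandlers(
        Schema.SObjectType sObjectType,
        System.TriggerOperation operation
    ) {
        String objectName = sObjectType.getDescribe().getName();
        List<TriggerExecutable> handlers = new List<TriggerExecutable>();
        for (TriggerHandlerControl__mdt control : [
            SELECT HandlerClassName__c, RunOnBeforeInsert__c, RunOnAfterInsert__c,
                RunOnBeforeUpdate__c, RunOnAfterUpdate__c, RunOnBeforeDelete__c,
                RunOnAfterDelete__c, RunOnAfterUndelete__c
            FROM TriggerHandlerControl__mdt
            WHERE SObjectType__c = :objectName AND IsActive__c = true
            ORDER BY Priority__c ASC
        ]) {
            if (!isEnabledFor(control, operation)) {
                continue;
            }
            // Resolving the class by name at runtime is what lets downstream
            // packages register handlers without modifying the framework.
            Type handlerType = Type.forName(control.HandlerClassName__c);
            if (handlerType == null) {
                throw new HandlerLoaderException(
                    'Unknown handler class: ' + control.HandlerClassName__c
                );
            }
            handlers.add((TriggerExecutable) handlerType.newInstance());
        }
        return handlers;
    }

    // One checkbox per trigger operation, mirroring the checkbox-based design.
    private static Boolean isEnabledFor(
        TriggerHandlerControl__mdt control,
        System.TriggerOperation operation
    ) {
        Boolean enabled = false;
        switch on operation {
            when BEFORE_INSERT { enabled = control.RunOnBeforeInsert__c; }
            when AFTER_INSERT { enabled = control.RunOnAfterInsert__c; }
            when BEFORE_UPDATE { enabled = control.RunOnBeforeUpdate__c; }
            when AFTER_UPDATE { enabled = control.RunOnAfterUpdate__c; }
            when BEFORE_DELETE { enabled = control.RunOnBeforeDelete__c; }
            when AFTER_DELETE { enabled = control.RunOnAfterDelete__c; }
            when AFTER_UNDELETE { enabled = control.RunOnAfterUndelete__c; }
        }
        return enabled;
    }
}
```

In namespaced packages, handler classes that live in another namespace may additionally need to be `global`. They may also need to be resolved with the two-argument `Type.forName(namespace, name)`.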

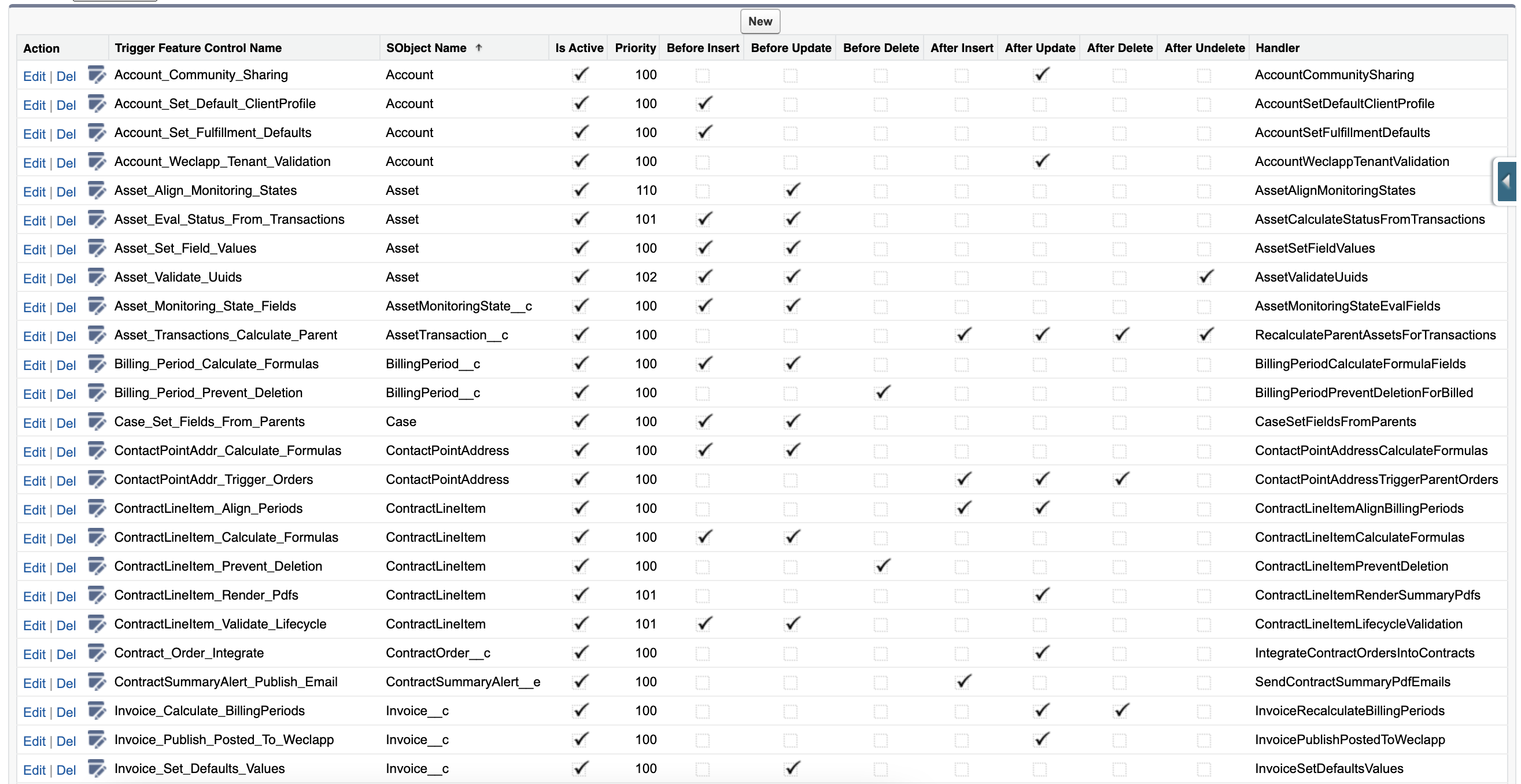

This is how the trigger handler controls may look like:

An Example Implementation of a TriggerExecutable

A framework is supposed to make your life easier. Therefore, implementing the TriggerExecutable must be as simple as writing all logic directly into the trigger. Trigger variables that control the flow must be easily accessible.

The framework allows you to write handlers that can be executed in multiple contexts or specific contexts. It’s your choice. The TriggerContext exposes structured access to all Trigger variables.

```apex
public class MyExampleHandlerImplementation implements TriggerExecutable {
    public void execute(TriggerContext context) {
        if (context.isInsert) {
            // do something special in insert context only
        }
        // execute logic in all contexts where the handler is configured to execute
        for (Account a : (List<Account>) context.getPrimaryList()) {
            // clean record data
        }
    }
}
```

Remember: the executable can run different logic depending on the context in which it is executed. You can also write separate handlers for "before insert" and "before update" and then execute them explicitly with two configurations. It is up to you, the developer.
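If you prefer one handler over two configurations, a single class can branch on `context.operation` with an Apex `switch` statement. The class name and the field logic are made up for this illustration. The logic defaults the description on insert and clears the next step when the stage changes.

```apex
// Hypothetical handler: one class that branches on the trigger operation
// instead of being split into two separately configured handlers.
public class HypotheticalOpportunityDefaults implements TriggerExecutable {
    public void execute(TriggerContext context) {
        switch on context.operation {
            when BEFORE_INSERT {
                for (Opportunity opp : (List<Opportunity>) context.newList) {
                    // only default the description on new records
                    if (String.isBlank(opp.Description)) {
                        opp.Description = 'Created via trigger framework';
                    }
                }
            }
            when BEFORE_UPDATE {
                for (Opportunity opp : (List<Opportunity>) context.newList) {
                    Opportunity previous = (Opportunity) context.oldMap.get(opp.Id);
                    // react only to actual stage changes
                    if (opp.StageName != previous.StageName) {
                        opp.NextStep = null;
                    }
                }
            }
        }
    }
}
```

Operations without a matching `when` branch are simply ignored. The custom metadata configuration still decides in which contexts the handler is invoked at all.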

For testability reasons, I strongly recommend always accessing the TriggerContext, instead of the Trigger variables directly.
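The payoff of that recommendation becomes visible in unit tests. A handler that only reads from its TriggerContext can be exercised with a hand-built context, without DML and without firing a real trigger. The test class and its inner handler below are hypothetical examples, not part of the framework.

```apex
// Hypothetical test class: exercises a handler with a hand-built TriggerContext,
// so no DML, no real trigger and no System.Trigger variables are involved.
@IsTest
public class TriggerContextUsageTest {
    // Hypothetical handler under test: upper-cases account names.
    public class UpperCaseNameHandler implements TriggerExecutable {
        public void execute(TriggerContext context) {
            for (Account a : (List<Account>) context.getPrimaryList()) {
                a.Name = a.Name.toUpperCase();
            }
        }
    }

    @IsTest
    public static void upperCasesNamesWithoutDml() {
        // Simulate a before insert context by hand
        TriggerContext context = new TriggerContext();
        context.operation = System.TriggerOperation.BEFORE_INSERT;
        context.isInsert = true;
        context.isUpdate = false;
        context.isDelete = false;
        context.isUndelete = false;
        context.isBefore = true;
        context.isAfter = false;
        context.sObjectType = Schema.Account.SObjectType;
        context.newList = new List<Account>{ new Account(Name = 'Acme') };
        context.size = context.newList.size();

        new UpperCaseNameHandler().execute(context);

        Assert.areEqual('ACME', ((Account) context.newList[0]).Name);
    }
}
```

A handler that read `Trigger.new` directly would return null in this test, because no trigger is executing.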

Enabling The Framework On A Trigger

And here’s the last piece of the puzzle: The trigger that calls the framework. I recommend putting all triggers for standard objects in the package that implements the framework. If a downstream dependency introduces a custom object that needs to be enabled, the package itself should implement the trigger together with the custom object.

```apex
trigger onOpportunity on Opportunity(
    before insert,
    after insert,
    before update,
    after update,
    before delete,
    after delete,
    after undelete
) {
    Triggers.run(Schema.Opportunity.SObjectType);
}
```

The Trigger Framework API

A developer does not need to know how the Triggers API works internally. That’s a good thing, because the framework is supposed to encapsulate complexity. That’s why I will not go over the actual implementation here. I added the full implementation to the js-salesforce-apex-utils package. Feel free to use it according to its license.

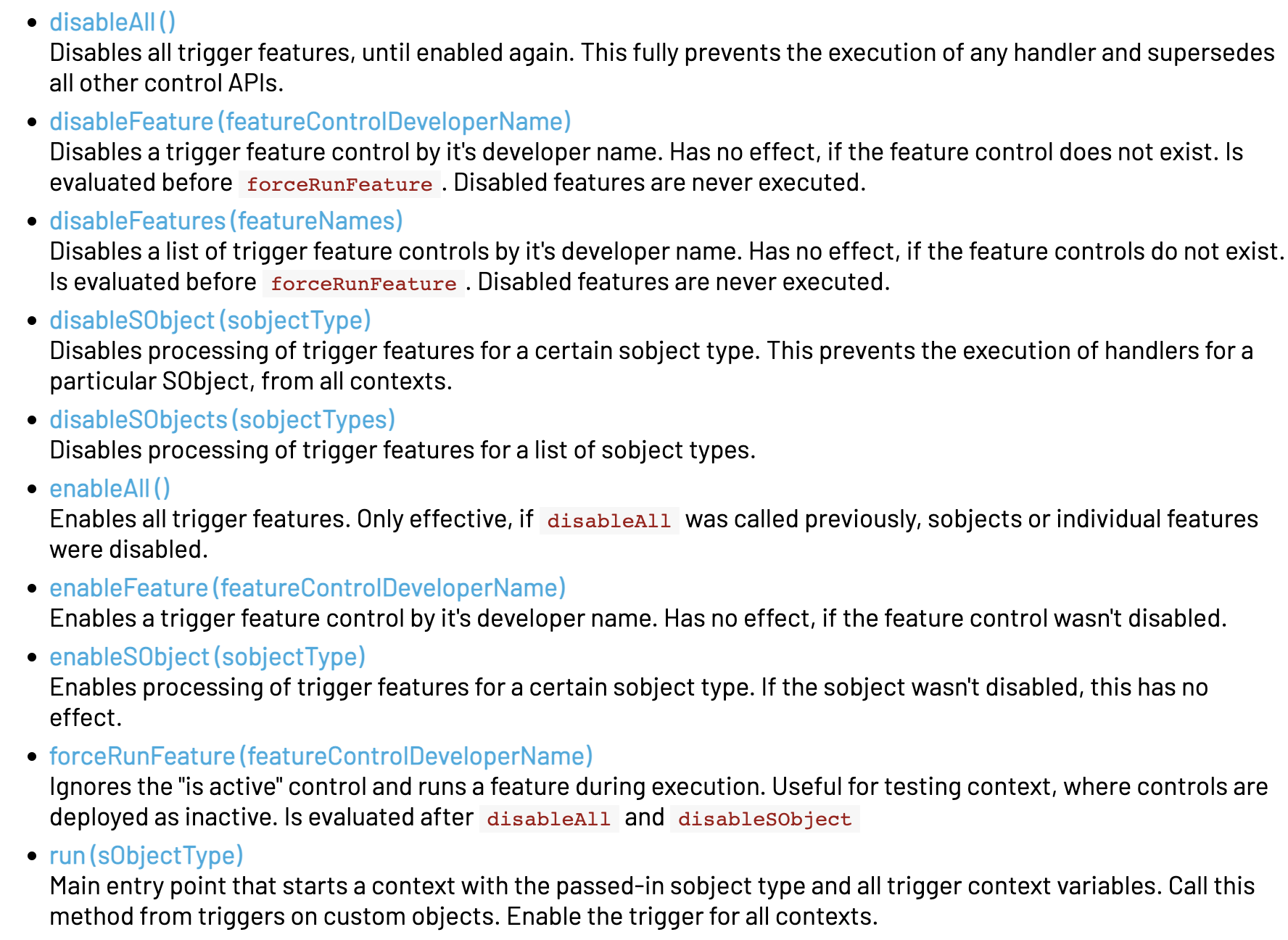

The framework provides some very useful developer APIs that cater to two use cases:

- Disabling resource-intensive functionalities to improve performance in E2E tests

- Disabling data-integrity functionalities to simulate corrupted data in E2E tests
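The framework's actual developer API is documented in the package. The class below is not that API. It is a hypothetical illustration of the technique behind both use cases: a static set of disabled handler names. The set lives only for the current Apex transaction, and a dispatcher would consult it before calling `execute`.

```apex
// Hypothetical bypass registry, NOT the API of js-salesforce-apex-utils.
// Static variables in Apex are scoped to one transaction, so a disabled
// handler stays disabled only for the current test or fixture run.
public class HypotheticalTriggerBypass {
    private static Set<String> disabledHandlers = new Set<String>();

    public static void disable(String handlerName) {
        disabledHandlers.add(handlerName);
    }

    public static void enable(String handlerName) {
        disabledHandlers.remove(handlerName);
    }

    // A dispatcher would skip any handler for which this returns true.
    public static Boolean isDisabled(String handlerName) {
        return disabledHandlers.contains(handlerName);
    }
}
```

In an E2E fixture, you would disable an expensive or data-integrity handler before inserting test data. Then you would enable it again, so the scenario under test runs with the full set of handlers.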

Here is a screenshot of the ApexDox documentation. If you are unsure how to use this API, see my example implementation.

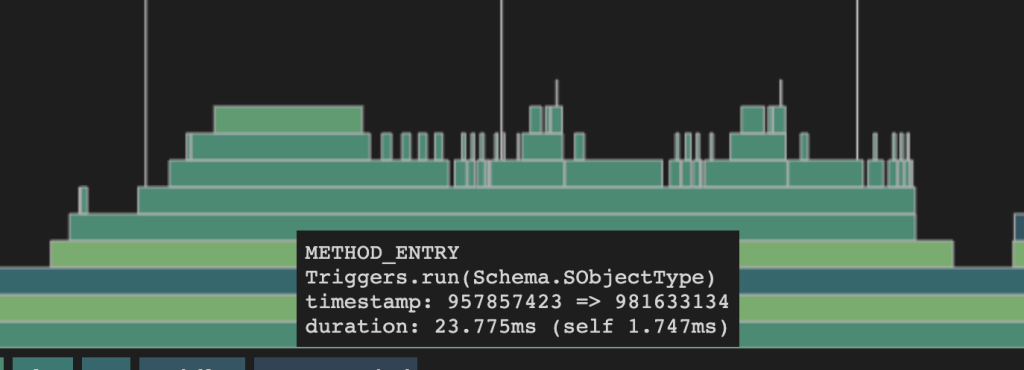

Benchmarking

The framework is optimized for performance. The typical overhead for loading handlers is about 20–50 ms per object. Handlers are cached, so if the trigger of the same object is entered multiple times in a transaction, the framework does not have to load the handlers again.
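Caching of this kind is typically done with a static map keyed by SObjectType. Apex static variables survive for the whole transaction, so re-entrant trigger calls hit the cache. The sketch below is a hypothetical illustration of this technique; `loadFromMetadata` is a stand-in for the real, more expensive lookup.

```apex
// Hypothetical sketch of per-transaction handler caching, not the framework's code.
public class HypotheticalHandlerCache {
    // Lives for the whole Apex transaction, so recursive trigger invocations
    // (e.g. an update that fires the same trigger again) reuse the result.
    private static Map<Schema.SObjectType, List<String>> handlerNamesByType =
        new Map<Schema.SObjectType, List<String>>();

    @TestVisible
    private static Integer loadCount = 0;

    public static List<String> getHandlerNames(Schema.SObjectType sObjectType) {
        if (!handlerNamesByType.containsKey(sObjectType)) {
            handlerNamesByType.put(sObjectType, loadFromMetadata(sObjectType));
        }
        return handlerNamesByType.get(sObjectType);
    }

    // Placeholder for the expensive custom metadata query; counts invocations.
    private static List<String> loadFromMetadata(Schema.SObjectType sObjectType) {
        loadCount++;
        return new List<String>();
    }
}
```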

By far the biggest performance gain comes from disabling triggers in fixtures. Anyone who has managed a sufficiently large Org knows that complex fixtures often run for 10 seconds or more. Disabling triggers there can make the difference between a stable, future-proof E2E test and flaky tests that constantly fail because of Apex CPU limits.

Summary

Existing frameworks are either overly complex or lack fundamental requirements. That’s why the world needs yet another trigger framework. This framework is designed to be progressive. You can introduce it any time you want and migrate all functionalities at your own pace. For us, migration was very easy and took less than a day per package.

I encourage you to try it out for yourself. I published the full implementation together with documentation and example code here: https://github.com/j-schreiber/js-salesforce-apex-utils.