I recently came across a stone-old question: How many apex triggers per object are a best practice? Is the „single apex trigger per object“ dogma still en vogue after the introduction of SFDX?

Why Are Multiple Apex Triggers Per Object Considered Bad?

A quick research on this topic shows: The salesforce community basically agrees on the „one trigger per object“ dogma. If you dig a little deeper, most of the answers refer to an article that was originally published almost 20 years ago. I have rarely seen reasoning that goes beyond reciting the „cannot guarantee order of execution“-argument.

Go back in time 5 years and that made sense. There was no packaging and most of us were developing on Sandboxes using the web-based IDE. Our orgs were persistent and lasted for months. Only big teams were practicing source driven development with the available proprietary tooling. But like most java-like applications, salesforce deployments were mostly monolithic.

There was no benefit in splitting modules into independently deployable packages. And as a result, there were no drawbacks in having a single monolithic trigger that centralises all logic. But the introduction of SFDX changed that.

So How Many Apex Triggers Per Object Are Good?

Since 2017, we have Scratch Orgs and 2nd generation Packaging. This means we can finally practice DevOps. We automatically build our development environments from source. Consequently, we want our packages and it’s dependencies as lean as possible. The better our dependency management, the faster we can set up our development environments and the easier we can scale our development to work on different modules simultaneously for instance.

And this is where the „one trigger per object“ dogma gets in our way.

Assuming you plan to actually test your domain layer properly, there is no way you can have isolated packages that implement trigger logic on the same object and share a common trigger at the same time. Even if one of the packages would depend on the other, it still couldn’t re-use the same trigger. This was different, when we had one monolithic repository. Back in these days, you could simply make a deployment to add an additional handler to your centralised trigger.

Custom Metadata Trigger Frameworks

The only way to technically solve this, is a variation of a custom metadata-based configurable trigger framework. Such a framework typically implements the trigger (usually executing on all contexts) and calls a dispatcher that checks for registered handlers at run-time. Therefore, all packages implementing a domain layer depend on that framework, implement their handlers and register it with a custom metadata record.

The implementations I’ve seen, usually provide the following benefits:

- Enabling/Disabling handlers similar to how one would enable or disable a flow or workflow rule.

- Defining the order of execution (or priority) of the handler.

- Overriding handler execution settings in test context (disabling triggers for unit tests).

On the other hand, they also have substantial drawbacks:

- Enabling and disabling handlers introduces an additional layer of complexity, similar to feature toggles. This reduces testability and determinism of your code.

- It introduces considerable development overhead and every package must use the framework as a dependency.

- You most likely will implement triggers for all standard objects in the framework or a dependent shared core package. If you do so, you will end up with lots of triggers running in contexts that you don’t need today.

- On the other hand, if you don’t anticipate everything in advance, you will constantly have to add new triggers to your framework / core. This conflicts with the acyclic dependency principle (ADP), since your high-level packages depend on low-level implementation.

To sum it up: In my experience, the overhead of maintaining a trigger framework is not worth the marginal benefits. Especially if we consider what we were trying to achieve in the first place: controlling the order of execution.

How Packaging Changes Everything

The introduction of 2nd generation packaging made the „one trigger“ paradigm obsolete. Your packages —by design— should not know about each other or declare a dependency hierarchy otherwise. In both cases, they cannot modify contents of the other package, they can only depend on it.

The benefits of separating your code into packages (scaling development teams, independent deployments, lower complexity in projects, improved dependency management, etc) by far outweigh the negligible downsides of multiple triggers. You shouldn’t have to worry about order of execution for functionalities that shouldn’t depend on each other in the first place.

Consider this: An unlocked package should be either a library (abstract functionality that is used by other applications) or a application (concrete functionality with business value). Applications, of course, can form a dependency tree. Functionalities of one package should never leak into the sovereign territory of another package.

One Trigger Per Object Per Package Is A Good Idea

If your packages are designed with clear boundaries of scope, it is completely fine to have multiple triggers on the same object. Their functionalities are not supposed to interfere, therefore their order of execution doesn’t matter. Controlling the order of execution across multiple packages is a non-existing problem.

If two functionalities have such a high cohesion, they should probably be located in the same package. On the other hand, if functionalities are located in different packages and do not even depend on each other, how could their order of execution matter?

Why Even Multiple Triggers Per Object Per Package Might Be A Good Idea

To me it makes sense that each package should be managed as an independend application. In the end, they might even have separate development teams. So where does that leave us?

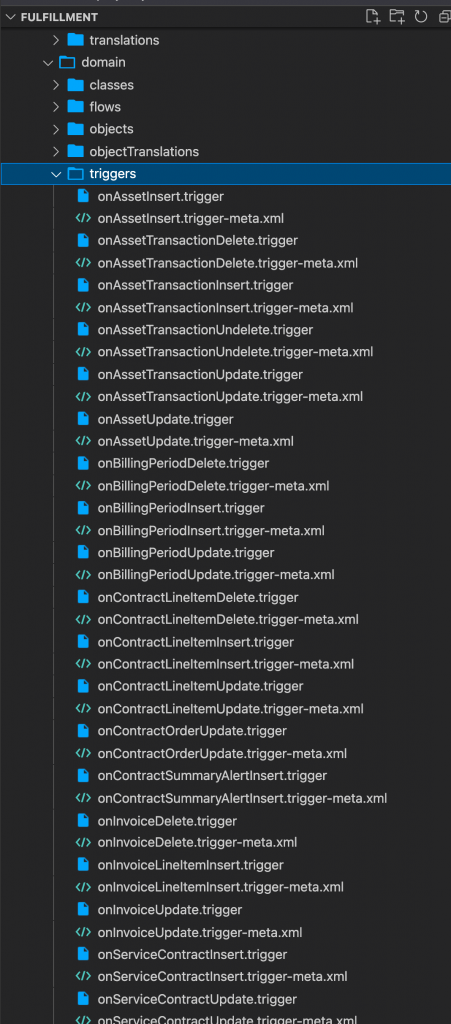

We recently launched a billing application in our Fulfillment package that provides automated billing for subscription products, automatic and manual renewal for contracts as well as advanced revenue forecasting capabilities. The domain layer grew quite complex, and we had a lot of triggers to implement.

Eventually I ended up with a single trigger per transaction scope (insert, update, delete, undelete). I admit the folder looks a little bit bloated, but the triggers are as lean as it gets.

Most triggers look like this and only invoke a single method in their domain class:

trigger onBillingPeriodDelete on BillingPeriod__c(before delete) {

FulfillmentBillingPeriodDomain.preventDeletionForBilledPeriods(Trigger.old);

}Even the more complex business entities only call very few domain methods:

trigger onContractLineItemUpdate on ContractLineItem(before update, after update) {

if (Trigger.isBefore) {

FulfillmentContractLineItemDomain.setFieldsFromProduct(Trigger.new);

} else {

FulfillmentContractLineItemDomain.alignBillingPeriods(Trigger.new, Trigger.oldMap);

FulfillmentContractLineItemDomain.registerPdfRenderings(Trigger.new, Trigger.oldMap);

}

}But the most important part of all this: Each trigger only gets executed when it needs to. The order of execution (as far as it matters) is obvious and clear. It is not hidden in a specific field buried deep somewhere in a custom metadata record.

Summary

I believe, the „one trigger per object“ dogma is outdated. It originated from a time where we didn’t have packages or scratch orgs. But all that changed when we got second generation packages. Today we have to balance the complexity that comes with trigger frameworks and the benefits of independently developing packages.

I came to the conclusion, that at least for medium sized projects (below 200k lines of apex code), the number of triggers per object doesn’t matter. Focussing on a solid understanding of your domain layer (and its boundaries) is much more important than worrying about the number of triggers that are used to implement said domain layer.